FaceApp: A Symptom of a Much Deeper Problem

If there is a silver lining to the FaceApp scandal, it’s that we’ve all learned definitively: our faces are targets.

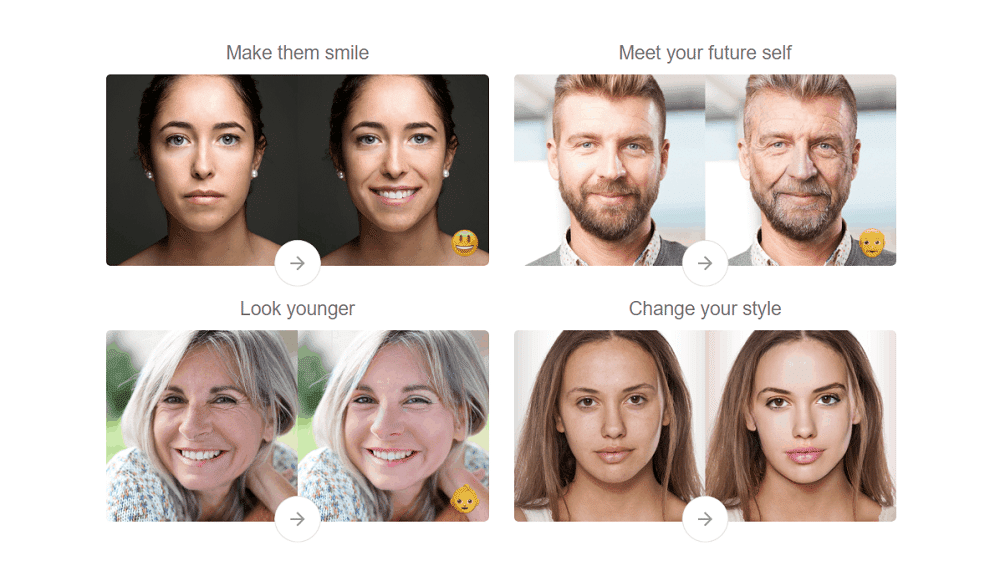

This week, prominent politicians and major news outlets picked up on the emerging FaceApp scandal – the latest in a string of incidents in which facial images were scraped, collected and analyzed without consent. The vocal calls for congressional investigations and warnings of national security violations brought home what security professionals and facial recognition experts have been saying all along: our faces are compromised.

It’s not a new story. In May of this year, it was the Ever app – the photo storage service that was using its users’ photos to train facial recognition software and then selling that technology to law enforcement. Before that, IBM was discovered to have been using Flickr photos to train facial recognition applications without permission from the people in the photos.

And just last month we learned that ICE (the Immigration and Customs Enforcement division of the US Department of Homeland Security) had been mining state driver’s license databases using facial recognition technology, analyzing millions of motorists’ photos without their knowledge.

And if the government is doing it, who’s going to stop the bad guys?

The Irony of Facial Recognition

It is truly ironic. For years we struggled with passwords – coming up with new ones every few months, remembering which one to use for which service, recalling our mothers’ maiden names and the names of our first pets.

Then biometric authentication – and specifically facial recognition – became widely available, and we thought it was the end of our password problems. What could be easier? Your face is unique – the thinking went – and it’s always there. So, who needs passwords?

But we forgot one important thing: by using our faces as passwords, we were literally writing our passwords on our foreheads. And unlike passwords, which can be updated, our faces can’t be changed.

Or can we?

So, What Can We Do?

Faces can’t be changed, except by plastic surgery that most of us would prefer to avoid. But the images of our faces can be protected from misuse – which is the key to the continued and effective use of facial recognition for access control.

We can protect our facial images by making them unrecognizable to facial recognition software while keeping them looking the same to human eyes. And we’re not talking about the “brute force” methods of the past – blurring, pixelation or face swapping. Advanced technology can create a new, unique photo of yourself that looks the same to people, yet does not carry your “faceprint” – those key features that AI-based face recognition tools look for to determine who you are.

D-ID enhances privacy by removing unnecessary sensitive biometric data from facial images. Completely seamless and transparent, a D-ID protected photo looks no different to the human eye. Yet D-ID photos cannot be decrypted or reverse-engineered – outsmarting even the most advanced facial recognition engines. This means that facial recognition software cannot match a D-ID-protected photo to any identity – whether it’s the bad guys or the good guys trying to do so.

So Now We Know

Our faces – arguably the most personal and sensitive of our biometric data – have become targets. And until now we’ve all willingly handed them over to anyone who asks. But now we know the risk, and now we know the way to reduce it.